The Rise of AI Model Distillation

AI distillation gained widespread recognition in January 2025, when DeepSeek, a Chinese AI research company, released a cost-effective AI model. The model reportedly required significantly less computing power than earlier large language models (LLMs) from developers such as OpenAI and the major hyperscalers. Although the benchmarks for DeepSeek’s model are still under debate, its release marked a pivotal moment for the AI industry.

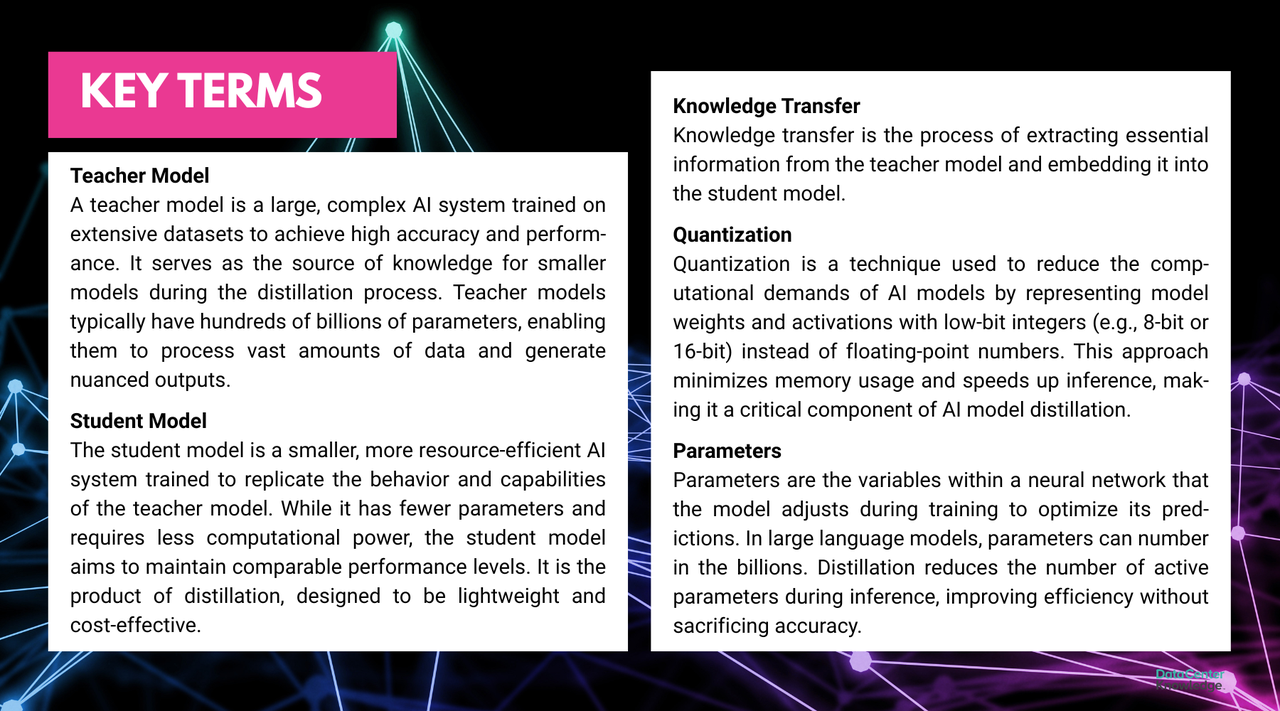

Key AI model distillation terms include teacher model, student model, knowledge transfer, and quantization. Image: DCN.

DeepSeek’s engineers employed a range of techniques to develop a cost-effective AI model, including reducing floating-point precision and manually optimizing code at the level of the Nvidia GPU instruction set. Central to their methodology was AI model distillation, in which a smaller student model is trained to reproduce the behavior of a larger teacher model, an approach inspired by software architecture principles that prioritize efficiency.
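
To make the teacher and student idea concrete, here is a minimal sketch of a distillation loss in plain Python. It assumes one commonly used formulation, in which the student is penalized by the KL divergence between temperature-softened teacher and student output distributions. The function names, example logits, and temperature value are hypothetical, and nothing here is claimed to reflect DeepSeek's actual training code.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw logits into probabilities, softened by a temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2.

    Assumption: this is the classic soft-target formulation, used here
    purely for illustration.
    """
    p = softmax(teacher_logits, temperature)  # teacher's soft targets
    q = softmax(student_logits, temperature)  # student's predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


# Hypothetical logits for a three-class example.
teacher = [4.0, 1.0, 0.5]
student_far = [0.5, 1.0, 4.0]    # disagrees strongly with the teacher
student_close = [3.8, 1.1, 0.6]  # nearly matches the teacher

loss_far = distillation_loss(teacher, student_far)
loss_close = distillation_loss(teacher, student_close)
print(f"far: {loss_far:.4f}  close: {loss_close:.4f}")
```

The loss is zero when the student's logits match the teacher's and grows as the two distributions diverge, which is the signal a training loop would minimize. A higher temperature flattens both distributions, so the student also learns from the teacher's relative ranking of less likely answers.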